The CRC calculation I am doing on the .hex file uses the same algorithm as the hardware CRC on the STM32 (I have checked both on example data in debug mode and the output was the same). First, I successfully uploaded the .hex file (without CRC check) to the controller and the code works fine.

Intel hexadecimal object file format, Intel hex format or Intellec Hex is a file format that conveys binary information in ASCII text form. It is commonly used for programming microcontrollers, EPROMs, and other types of programmable logic devices. In a typical application, a compiler or assembler converts a program's source code (such as in C or assembly language) to machine code and outputs.

Introduction on CRC calculations

Whenever digital data is stored or interfaced, data corruption might occur. Since the beginning of computer science, people have been thinking of ways to deal with this type of problem. For serial data they came up with the solution of attaching a parity bit to each sent byte. This simple detection mechanism works if an odd number of bits in a byte changes, but an even number of false bits in one byte will not be detected by the parity check. To overcome this problem people have searched for mathematically sound mechanisms to detect multiple false bits. The CRC calculation or cyclic redundancy check was the result of this. Nowadays CRC calculations are used in all types of communications. All packets sent over a network connection are checked with a CRC. Each data block on your hard disk also has a CRC value attached to it. The modern computer world cannot do without these CRC calculations. So let's see why they are so widely used. The answer is simple: they are powerful, detect many types of errors and are extremely fast to calculate, especially when dedicated hardware chips are used.

One might think that using a checksum can replace proper CRC calculations. It is certainly easier to calculate a checksum, but checksums do not find all errors. Let's take an example string and calculate a one byte checksum. The example string is 'Lammert' which converts to the ASCII values [ 76, 97, 109, 109, 101, 114, 116 ]. The one byte checksum of this array can be calculated by adding all values (the sum is 722), then dividing the sum by 256 and keeping the remainder. The resulting checksum is 210.

In this example we have used a one byte long checksum which gives us 256 different values. Using a two byte checksum will result in 65,536 possible different checksum values and when a four byte value is used there are more than four billion possible values. We might conclude that with a four byte checksum the chance that we accidentally do not detect an error is less than 1 in 4 billion. That seems rather good, but this is only theory. In practice, bits do not change purely randomly during communications. They often fail in bursts, or due to electrical spikes. Let us assume that in our example array the least significant bit of the character 'L' is set, and the least significant bit of the character 'a' is lost during communication. The receiver will then see the array [ 77, 96, 109, 109, 101, 114, 116 ] representing the string 'M`mmert'. The checksum for this new string is still 210, but the result is obviously wrong, after only two bits changed. Even if we had used a four byte long checksum we would not have detected this transmission error. So calculating a checksum may be a simple method for detecting errors, but it doesn't give much more protection than the parity bit, independent of the length of the checksum.

The idea behind a check value calculation is simple. Use a function F(bval,cval) that inputs one data byte and a check value and outputs a recalculated check value. In fact checksum calculations as described above can be defined in this way. Our one byte checksum example could have been calculated with the following function (in C language) that we call repeatedly for each byte in the input string. The initial value for cval is 0.
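The listing the paragraph above refers to is not present in this copy of the text. A minimal sketch of such a function could look like the following; the names `checksum_update` and `checksum_bytes` are hypothetical and chosen only for illustration.

```c
#include <stddef.h>
#include <stdint.h>

/* One step of the check value function F(bval, cval) for a one byte
   additive checksum: add the data byte to the running value and keep
   the result modulo 256. (Hypothetical name, not the original listing.) */
static uint8_t checksum_update(uint8_t bval, uint8_t cval)
{
    return (uint8_t)((bval + cval) & 0xFF);
}

/* Calls the step function once per input byte, starting with cval = 0. */
static uint8_t checksum_bytes(const uint8_t *data, size_t len)
{
    uint8_t cval = 0;
    for (size_t i = 0; i < len; i++)
        cval = checksum_update(data[i], cval);
    return cval;
}
```

Feeding the seven bytes of 'Lammert' through `checksum_bytes` gives 210, and so does the corrupted array [ 77, 96, 109, 109, 101, 114, 116 ], which is exactly the weakness described above.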

The idea behind CRC calculation is to look at the data as one large binary number. This number is divided by a certain value and the remainder of the calculation is called the CRC. Dividing in the CRC calculation at first looks to cost a lot of computing power, but it can be performed very quickly if we use a method similar to the one learned at school. As an example we will calculate the remainder for the character 'm' (which is 1101101 in binary notation) by dividing it by 19 or 10011. Please note that 19 is an odd number. This is necessary, as we will see further on. Please refer to your schoolbooks, as the binary calculation method here is not very different from the decimal method you learned when you were young. It might only look a little bit strange. Notations also differ between countries, but the method is similar.

With decimal calculations you can quickly check that 109 divided by 19 gives a quotient of 5 with 14 as the remainder. But what we also see in the scheme is that every extra bit to check only costs one binary comparison and, in 50% of the cases, one binary subtraction. You can easily increase the number of bits of the test data string, for example to 56 bits if we use our example value 'Lammert', and the result can be calculated with 56 binary comparisons and an average of 28 binary subtractions. This can be implemented directly in hardware with only very few transistors involved. Software algorithms can also be very efficient.
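The schoolbook division described above can be sketched in C as a loop that brings down one bit at a time. This is only an illustration of the comparison and subtraction steps; the function name `long_division_remainder` is an assumption, not part of any library.

```c
#include <stdint.h>

/* Schoolbook binary long division on the low nbits of value.
   Returns value % divisor using one comparison per bit and a
   subtraction whenever the divisor fits. divisor must be nonzero. */
static uint32_t long_division_remainder(uint32_t value, uint32_t divisor, int nbits)
{
    uint32_t rem = 0;
    for (int i = nbits - 1; i >= 0; i--) {
        rem = (rem << 1) | ((value >> i) & 1u);  /* bring down the next bit */
        if (rem >= divisor)                      /* one comparison per bit  */
            rem -= divisor;                      /* subtract when it fits   */
    }
    return rem;
}
```

Calling `long_division_remainder(109, 19, 7)` walks through the seven bits of 1101101 and returns 14, the same remainder as the decimal calculation.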

For CRC calculations, no normal subtraction is used, but all calculations are done modulo 2. In that situation you ignore carry bits and in effect the subtraction becomes equal to an exclusive OR operation. This looks strange, and the resulting remainder has a different value, but from an algebraic point of view the functionality is equal. A discussion of this would need university level knowledge of algebraic field theory and I guess most of the readers are not interested in this. Please look at the end of this document for books that discuss this in detail.
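To make the difference concrete, here is a small sketch of the same division carried out modulo 2, where each subtraction is replaced by an exclusive OR. The helper name `mod2_remainder` is hypothetical, and the sketch divides the raw value without appending any extra zero bits.

```c
#include <stdint.h>

/* Modulo 2 division of the low nbits of value by divisor.
   Wherever the current top bit is set, the divisor is aligned under
   it and XORed in; no carries or borrows are involved. */
static uint32_t mod2_remainder(uint32_t value, uint32_t divisor, int nbits)
{
    int top = 31;                                /* highest set bit of divisor */
    while (top > 0 && !((divisor >> top) & 1u))
        top--;

    for (int i = nbits - 1; i >= top; i--) {
        if ((value >> i) & 1u)
            value ^= divisor << (i - top);       /* "subtract" modulo 2 */
    }
    return value;
}
```

For the example value 1101101 and divisor 10011 this sketch returns 7 (binary 0111) rather than the 14 obtained with ordinary subtraction, which illustrates that the remainder differs while the procedure stays the same.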

Now we have a CRC calculation method which is implementable in both hardware and software and also has a more random feeling than calculating an ordinary checksum. But how will it perform in practice when one or more bits are wrong? If we choose the divisor (19 in our example) to be an odd number, you don't need high level mathematics to see that every single bit error will be detected. This is because every single bit error changes the dividend by a power of 2. If for example bit n changes from 0 to 1, the value of the dividend will increase by 2^n. If on the other hand bit n changes from 1 to 0, the value of the dividend will decrease by 2^n. Because a power of two is never divisible by an odd number greater than one, the remainder of the CRC calculation will change and the error will not go unnoticed.
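The single bit argument can be checked by brute force. The sketch below uses ordinary integer division, as in the paragraph above, and flips every bit of the data in turn; the function name `detects_all_single_bit_errors` is an assumption made for this example.

```c
#include <stdint.h>

/* Returns 1 if flipping any single bit in the low nbits of value
   changes value % divisor, and 0 if at least one flip goes unnoticed. */
static int detects_all_single_bit_errors(uint32_t value, uint32_t divisor, int nbits)
{
    uint32_t good = value % divisor;
    for (int n = 0; n < nbits; n++) {
        uint32_t corrupted = value ^ (1u << n);  /* adds or subtracts 2^n */
        if (corrupted % divisor == good)
            return 0;
    }
    return 1;
}
```

With the odd divisor 19 every single bit error in 109 is caught. With an even divisor such as 16, flipping bit 4 leaves the remainder unchanged, so the function returns 0.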

The second situation we want to detect is when two single bits change in the data. This requires some mathematics which can be read in Tanenbaum's book mentioned below. You need to select your divisor very carefully to be sure that you will always detect the two wrong bits, independent of the distance between them. It is known that the commonly used values 0x8005 and 0x1021 of the CRC16 and CRC-CCITT calculations perform very well in this respect. Please note that other values might or might not, and you cannot easily calculate which divisor value is appropriate for detecting two bit errors and which isn't. Rely on the extensive mathematical research on this issue done some decades ago by highly skilled mathematicians and use the values these people obtained.

Furthermore, with our CRC calculation we want to detect all errors where an odd number of bits changes. This can be achieved by using a divisor with an even number of bits set. Using modulo 2 mathematics you can show that all errors with an odd number of bits are detected. As I have said before, in modulo 2 mathematics the subtraction function is replaced by the exclusive OR. There are four possible XOR operations: 0 XOR 0 = 0 (even, even gives even), 0 XOR 1 = 1 (even, odd gives odd), 1 XOR 0 = 1 (odd, even gives odd) and 1 XOR 1 = 0 (odd, odd gives even).

We see that for all combinations of bit values, the oddness of the expression remains the same. When choosing a divisor with an even number of bits set, the oddness of the remainder is equal to the oddness of the dividend. Therefore, if the oddness of the dividend changes because an odd number of bits changes, the remainder will also change. So all errors which change an odd number of bits will be detected by a CRC calculation which is performed with such a divisor. You might have seen that the commonly used divisor values 0x8005 and 0x1021 actually have an odd number of bits set, and not even as stated here. This is because inside the algorithm there is a 'hidden' extra bit 2^16 which makes the actually used divisor values 0x18005 and 0x11021.
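The oddness argument can be tried out with a short sketch. It assumes a small 5 bit divisor 11011 (four bits set) purely for illustration; the helper names `oddness`, `xor_remainder` and `oddness_preserved` are hypothetical.

```c
#include <stdint.h>

/* Returns 1 if v has an odd number of bits set, 0 otherwise. */
static int oddness(uint32_t v)
{
    int odd = 0;
    while (v) {
        odd ^= 1;
        v &= v - 1;          /* clear the lowest set bit */
    }
    return odd;
}

/* Modulo 2 remainder of the low nbits of value (XOR based division). */
static uint32_t xor_remainder(uint32_t value, uint32_t divisor, int nbits)
{
    int top = 31;            /* highest set bit of the divisor */
    while (top > 0 && !((divisor >> top) & 1u))
        top--;
    for (int i = nbits - 1; i >= top; i--)
        if ((value >> i) & 1u)
            value ^= divisor << (i - top);
    return value;
}

/* Returns 1 if dividend and remainder have the same oddness. */
static int oddness_preserved(uint32_t value, uint32_t divisor, int nbits)
{
    return oddness(value) == oddness(xor_remainder(value, divisor, nbits));
}
```

With the divisor 11011 the oddness is preserved for every 7 bit input, because each XOR step flips an even number of bits. With the divisor 10011 (three bits set) the input 10000 has odd oddness but leaves the remainder 00011, so the property is lost.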

Last but not least, we want our CRC calculation to detect all burst errors up to a certain maximum length, and all longer burst errors with a high probability. A burst error is quite common in communications. It is the type of error that occurs because of lightning, relay switching, etc., where during a short period all bits are set to one. To really understand this you also need to have some knowledge of modulo 2 algebra, so please accept that with a 16 bit divisor you will be able to detect all bursts with a maximum length of 16 bits, and all longer bursts with at least 99.997% certainty.

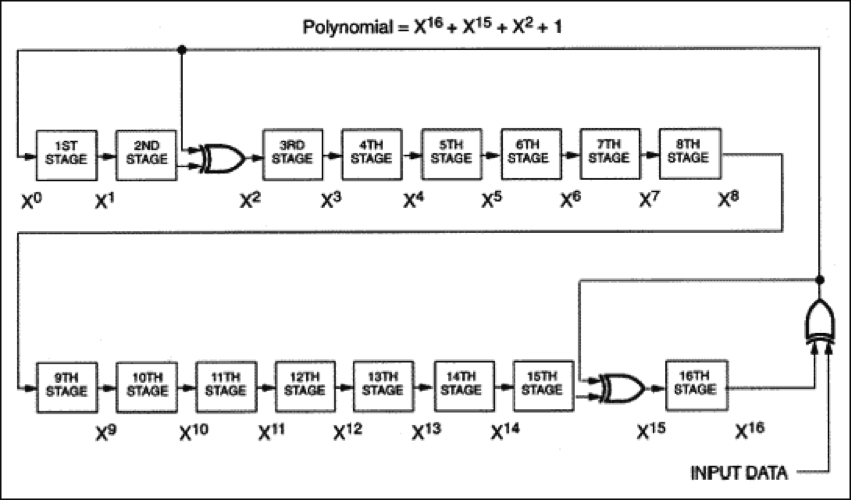

In a purely mathematical approach, CRC calculation is written down as polynomial calculations. The divisor value is most often not described as a binary number, but as a polynomial of a certain order. In practice some polynomials are used more often than others. The three used in the on-line CRC calculation on this page are the 16 bit wide CRC16 and CRC-CCITT and the 32 bit wide CRC32. The latter is probably the most used now because, among other things, it is the CRC generator for all network traffic verification and validation.

For all three types of CRC calculations I have a free software library available. The test program can be used directly to test files or strings. You can also look at the source code and integrate these CRC routines in your own program. Please be aware of the initialization values of the CRC calculation and of possibly necessary post-processing like flipping bits. If you don't do this you might get different results than other CRC implementations. All this pre- and post-processing is done in the example program, so it should not be too difficult to get your own implementation working. A commonly used test is to calculate the CRC value for the ASCII string '123456789'. If the outcome of your routine matches the outcome of the test program or the outcome on this website, your implementation is working and compatible with most other implementations.
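As an illustration of why the initialization value and post-processing matter, here is a minimal bit-by-bit sketch of two of the calculations. It is not the library mentioned above; the function names `crc_ccitt` and `crc32_ieee` are assumptions, and the CRC-32 variant assumed here is the common one with reflected bit order, initial value 0xFFFFFFFF and a final inversion.

```c
#include <stddef.h>
#include <stdint.h>

/* CRC-CCITT, polynomial 0x1021, most significant bit first.
   The caller supplies the initial value (0xFFFF and 0x0000 are both
   in common use and give different results). */
static uint16_t crc_ccitt(const uint8_t *data, size_t len, uint16_t init)
{
    uint16_t crc = init;
    for (size_t i = 0; i < len; i++) {
        crc ^= (uint16_t)(data[i] << 8);
        for (int bit = 0; bit < 8; bit++)
            crc = (crc & 0x8000) ? (uint16_t)((crc << 1) ^ 0x1021)
                                 : (uint16_t)(crc << 1);
    }
    return crc;
}

/* CRC-32, polynomial 0x04C11DB7 handled least significant bit first
   (reflected constant 0xEDB88320), init 0xFFFFFFFF, final inversion. */
static uint32_t crc32_ieee(const uint8_t *data, size_t len)
{
    uint32_t crc = 0xFFFFFFFFu;
    for (size_t i = 0; i < len; i++) {
        crc ^= data[i];
        for (int bit = 0; bit < 8; bit++)
            crc = (crc & 1u) ? (crc >> 1) ^ 0xEDB88320u : (crc >> 1);
    }
    return crc ^ 0xFFFFFFFFu;   /* post-processing: flip all bits */
}
```

For the test string '123456789', `crc_ccitt` gives 0x29B1 with an initial value of 0xFFFF and 0x31C3 with an initial value of 0, and `crc32_ieee` gives 0xCBF43926. A mismatch against these values usually points to a different initial value, bit order or final XOR rather than a wrong polynomial.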

Just as a reference, here are the polynomial functions for the most common CRC calculations. Please remember that the highest order term of the polynomial (x^16 or x^32) is not present in the binary number representation, but implied by the algorithm itself.

Polynomial functions for common CRCs

| CRC | Divisor | Polynomial |
|---|---|---|
| CRC-16 | 0x8005 | x^16 + x^15 + x^2 + 1 |
| CRC-CCITT | 0x1021 | x^16 + x^12 + x^5 + 1 |
| CRC-DNP | 0x3D65 | x^16 + x^13 + x^12 + x^11 + x^10 + x^8 + x^6 + x^5 + x^2 + 1 |
| CRC-32 | 0x04C11DB7 | x^32 + x^26 + x^23 + x^22 + x^16 + x^12 + x^11 + x^10 + x^8 + x^7 + x^5 + x^4 + x^2 + x^1 + 1 |
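The relation between the hexadecimal divisor values and the polynomial terms can be sketched as follows. Both helper names, `full_divisor` and `poly_has_term`, are hypothetical; `width` is the CRC width in bits (16 or 32 here).

```c
#include <stdint.h>

/* Adds the implied highest order term x^width to the stored value,
   e.g. 0x8005 with width 16 becomes 0x18005. */
static uint64_t full_divisor(uint32_t poly, int width)
{
    return ((uint64_t)1 << width) | poly;
}

/* Returns 1 if the polynomial contains the term x^exponent.
   The term x^width is always present although it is not stored. */
static int poly_has_term(uint32_t poly, int width, int exponent)
{
    if (exponent == width)
        return 1;
    if (exponent < 0 || exponent > width)
        return 0;
    return (int)((poly >> exponent) & 1u);
}
```

For example, `poly_has_term(0x1021, 16, 12)` is 1 and `poly_has_term(0x1021, 16, 15)` is 0, matching the CRC-CCITT polynomial listed in the table.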

Literature

| Year | Description | Author |
|---|---|---|
| 2002 | Computer Networks, describing common network systems and the theory and algorithms behind their implementation. | Andrew S. Tanenbaum |
| various | The Art of Computer Programming is the main reference for semi-numerical algorithms. Polynomial calculations are described in depth. Some level of mathematics is necessary to fully understand it though. | Donald E. Knuth |
| – | DNP 3.0, or distributed network protocol, is a communication protocol designed for use between substation computers, RTUs (remote terminal units), IEDs (intelligent electronic devices) and master stations for the electric utility industry. It is now also used in similar industries like waste water treatment, transportation and the oil and gas industry. | DNP User Group |

Hex File Crc

Description

pycrc is a CRC reference implementation in Python and a C source code generator for parametrised CRC models. The generated C source code can be optimised for simplicity, speed or small memory footprint, as required on small embedded systems. The following operations are implemented:

calculate the checksum of a string (ASCII or hex)

calculate the checksum of a file

generate the header and source files for a C implementation.

pycrc supports the following variants of the CRC algorithm:

bit-by-bit or bbb: the basic algorithm which operates individually on every bit of the augmented message (i.e. the input data with Width zero bits added at the end). This algorithm is a straightforward implementation of the basic polynomial division and is the easiest one to understand, but it is also the slowest one among all possible variants.

bit-by-bit-fast or bbf: a variation of the simple bit-by-bit algorithm. This algorithm still iterates over every bit of the message, but does not augment it (does not add Width zero bits at the end). It gives the same result as the bit-by-bit method by carefully choosing the initial value of the algorithm. This method might be a good choice for embedded platforms, where code space is more important than execution speed.

table-driven or tbl: the standard table driven algorithm. This is the fastest variant because it operates on one byte at a time, as opposed to one bit at a time. This method uses a look-up table (usually of 256 elements), which might not be acceptable for small embedded systems. The number of elements in the look-up table can be reduced with the --table-idx-width command line switch. The value of 4 bits for the table index (16 elements in the look-up table) can be a good compromise between execution speed and code size.

The --slice-by option enables a variant of the table-driven algorithm that operates on 32 bits of data or more at a time rather than 8 bits. This can dramatically speed up the calculation of the CRC, at the cost of increased code and data size. Note: this option is experimental and not well-tested. Check your results and please raise bugs if you find problems.
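To give an idea of what a table-driven variant looks like, here is a hand-written sketch for the CRC-CCITT polynomial 0x1021 with a 256 element look-up table. This is not code generated by pycrc; the names `crc_table`, `crc_table_init` and `crc_table_driven` are assumptions made for this example.

```c
#include <stddef.h>
#include <stdint.h>

/* 256 element look-up table: entry i holds the CRC of the single
   byte i, computed with the bit-by-bit method. */
static uint16_t crc_table[256];

/* Fills the table once; must be called before crc_table_driven(). */
static void crc_table_init(void)
{
    for (int i = 0; i < 256; i++) {
        uint16_t crc = (uint16_t)(i << 8);
        for (int bit = 0; bit < 8; bit++)
            crc = (crc & 0x8000) ? (uint16_t)((crc << 1) ^ 0x1021)
                                 : (uint16_t)(crc << 1);
        crc_table[i] = crc;
    }
}

/* Processes one whole byte per iteration instead of one bit. */
static uint16_t crc_table_driven(const uint8_t *data, size_t len, uint16_t init)
{
    uint16_t crc = init;
    for (size_t i = 0; i < len; i++)
        crc = (uint16_t)((crc << 8) ^ crc_table[((crc >> 8) ^ data[i]) & 0xFF]);
    return crc;
}
```

After calling `crc_table_init()` once, `crc_table_driven` with an initial value of 0xFFFF gives 0x29B1 for the string '123456789', the same value a bit-by-bit loop produces. The table costs 512 bytes of memory here, which is the trade-off between speed and size described above.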